In the last year, Artificial Intelligence (AI) has gone from being a buzzword to being a significant part of our lives, with an impact on many fields, including User Experience (UX). This change has brought new tools, methods, and possibilities for conducting research and creating user-centred designs. But the question remains: how are UX professionals incorporating AI into their workflow, and how do they perceive this burgeoning technology?

I recently conducted a short study with UX and Product professionals to delve deeper into these questions. I decided to split the write-up into two parts to make it easier to read. The first part, published a few weeks ago, focused on AI usage, self-perceived knowledge, and the advantages and concerns associated with incorporating AI at work. The results revealed that a majority of UX professionals are welcoming AI into their professional and personal lives. They acknowledge the potential transformative power of AI, adopting an approach marked by both enthusiasm and caution. A prominent theme that emerged is the need for AI to enhance human potential, not overshadow or replace it, emphasizing the importance of human touch and responsible AI utilization.

This second and final part focuses on the attitudes UX professionals have towards AI, as well as the relationships between the study variables.

Attitudes Towards AI

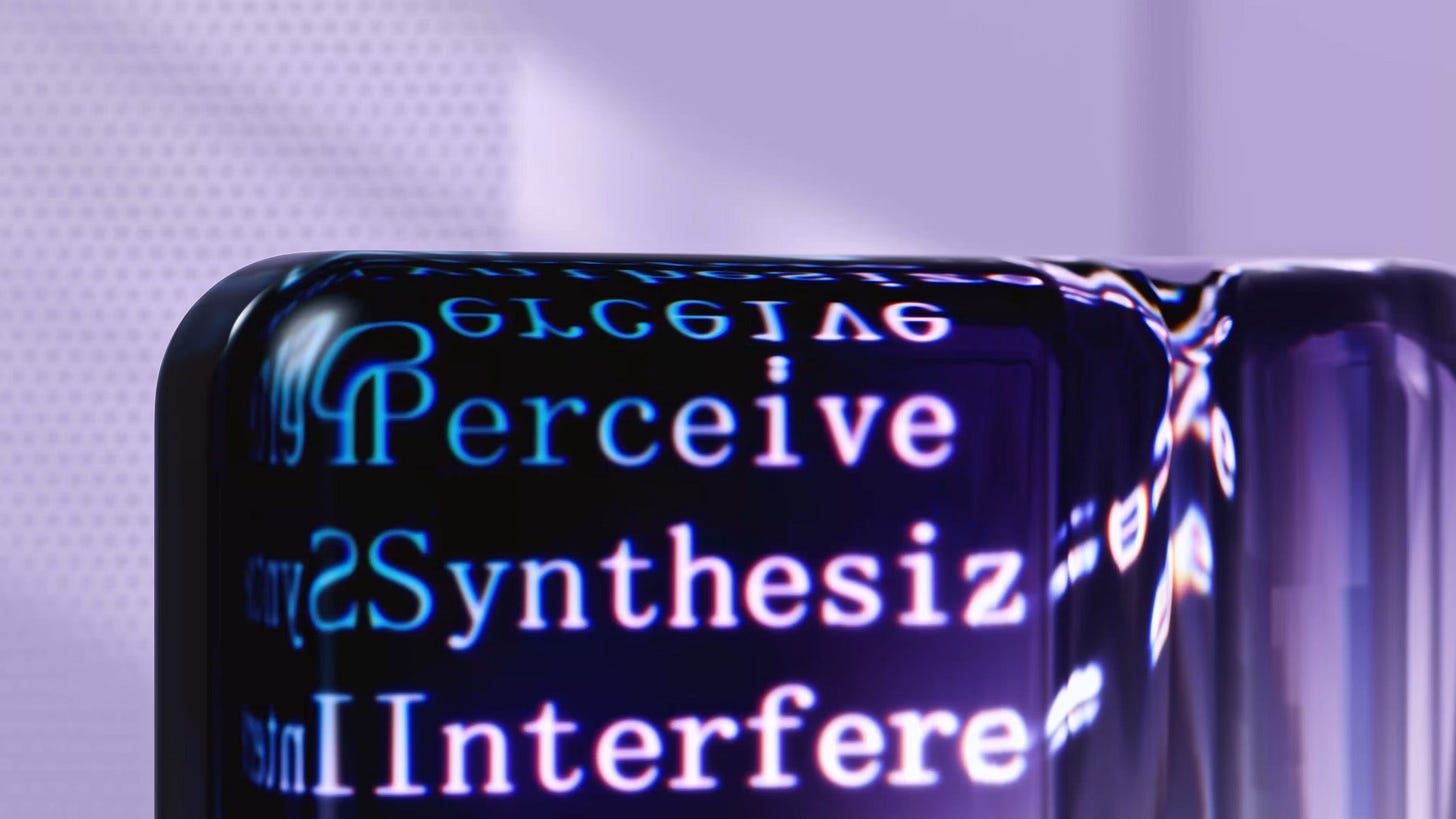

To measure attitudes towards AI, I utilised the General Attitudes towards Artificial Intelligence Scale (GAAIS), a well-acknowledged scale proven effective in previous research. This scale comprises two subscales: the Positive AI Attitudes Score and the Negative AI Attitudes Score. The latter, comprising negative statements about AI, is reverse-scored to also indicate positive attitudes towards AI, with higher scores indicating more favourable perspectives. As advised by the developers of GAAIS, I have refrained from merging the two subscales into one.
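The reverse-scoring step described above can be sketched in a few lines of Python. This is only an illustration, not the analysis code used for the study: it assumes a 5-point agreement scale (1 = strongly disagree, 5 = strongly agree), the function names are hypothetical, and the responses are made up.

```python
from statistics import mean

# Assumption: items are answered on a 5-point scale (1 = strongly disagree).
SCALE_MIN, SCALE_MAX = 1, 5


def reverse_score(response: int) -> int:
    """Flip a response so that disagreeing with a negative statement scores high."""
    return SCALE_MAX + SCALE_MIN - response


def subscale_scores(positive_items, negative_items):
    """Return the two subscale means, kept separate rather than merged."""
    positive = mean(positive_items)
    negative = mean([reverse_score(r) for r in negative_items])
    return positive, negative


# Made-up responses from one hypothetical participant (not study data).
positive_score, negative_score = subscale_scores([4, 5, 4, 3], [2, 1, 2, 3])
print(positive_score, negative_score)
```

After reverse-scoring, a high value on either subscale points in the same direction: a more favourable view of AI.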

A closer look (Figure 1) at the results shows that most respondents have a moderately positive viewpoint towards AI, particularly noted in the Positive AI Attitude Score. The Negative AI Attitude Scores, though leaning towards high scores indicating favourable views about AI, also reveal a spectrum of opinions, with some participants showcasing extremely positive attitudes and others adopting a negative stance.

This data implies that while UX professionals generally have a positive outlook on AI, a degree of caution is still prevalent.

Next, I examined individual responses from the two scales. A significant portion of UX professionals agreed with positive statements from the Positive Attitudes Scale, expressing a willingness to integrate AI into their job roles and acknowledging the potential benefits and impressive capabilities of AI.

In contrast, participants disagreed with most statements from the Negative Attitude Scale, apart from those about AI systems' propensity for errors and the ethical use of AI by organisations. These attitudes align with the key concerns voiced in the rest of the survey: the potential for errors and reliability issues with AI, and unethical AI use.

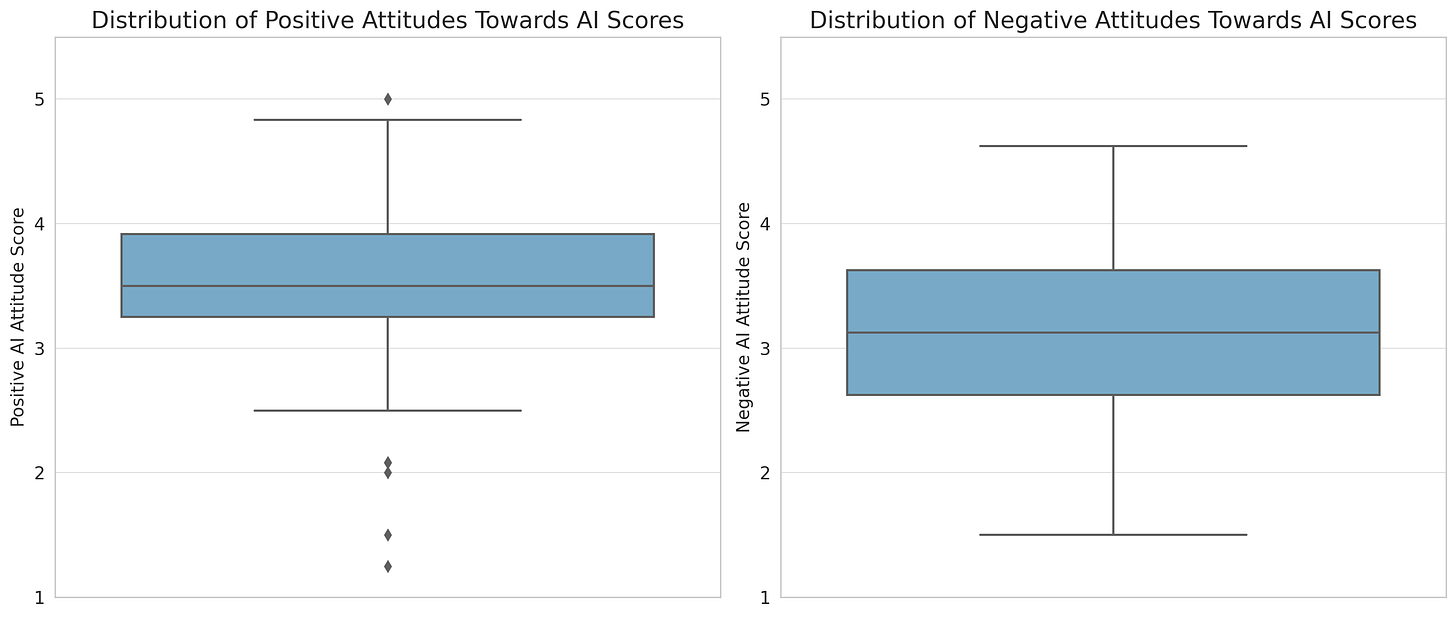

Attitudes towards AI and UX Roles

AI attitude scores were also examined by role. Roles with only a few data points were collated into the "Other UX or Product Role" category. All roles displayed a mostly positive attitude towards AI, with the "Other UX or Product Role" category showing the strongest positive sentiment. The "UX Researcher" group had the lowest score, but it's still above 3, indicating a somewhat positive sentiment. More research is needed to better understand how AI attitudes differ across UX roles. The small sample size in this study doesn’t allow us to reach any conclusions, but it’s something to explore further.

AI Attitudes and AI Tool Usage

How might these attitudes towards AI relate to the usage of AI tools in the workplace? To explore this question, the relationship between the Positive and Negative AI Attitude Scores and the frequency of AI tool usage at work was examined.

A moderate positive correlation was found between Positive AI Attitude and AI Tool Usage at Work (r = 0.5, p < 0.1). A weak but statistically significant positive correlation was also found between Negative AI Attitude and AI Tool Usage at Work (r = 0.3, p < .01). Because the Negative AI Attitude Score is reverse-scored, both results point in the same direction: individuals with more favourable attitudes towards AI tend to use AI tools more frequently in their work (or it could be the other way round; correlation analysis cannot tell us which).

Moreover, a statistically significant positive relationship was observed between AI knowledge and both positive and negative AI attitudes (r = 0.4, p < .01 and r = 0.3, p < .01 respectively), suggesting that individuals with higher self-reported AI knowledge display more favourable attitudes towards AI. This is aligned with previous research, which suggests that people with a deeper understanding of AI are more likely to use and trust it.

A positive relationship was also found between AI knowledge and AI tool usage at work (r = 0.3, p<.05). This suggests that individuals who rate their AI knowledge higher tend to use AI tools more frequently in their work.

These correlations suggest that attitudes towards AI, whether positive or negative, can play a role in shaping how often UX professionals use AI tools in their work. However, these are observational associations and should not be interpreted as causal relationships. More research beyond the scope of this study is needed.
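For readers who want to see how a coefficient like the ones above is calculated, here is a minimal sketch. It assumes a Pearson correlation (the write-up does not say which coefficient was used), the function name `pearson_r` is hypothetical, and the numbers are invented for illustration rather than taken from the survey.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of the products of deviations from each mean.
    covariation = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return covariation / (spread_x * spread_y)


# Invented values: attitude scores and usage frequency for five people.
attitude = [3.2, 4.1, 2.8, 4.6, 3.9]
usage = [2, 4, 1, 5, 3]
print(round(pearson_r(attitude, usage), 2))
```

The coefficient is symmetric, so swapping the two variables gives the same value. That is one way to see why it cannot say which variable influences the other.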

Discussion and Implications

The findings from this study provide fresh perspectives on the attitudes of UX professionals towards AI. Predominantly, UX professionals exhibit positive attitudes towards this emerging technology.

Interestingly, individuals with higher self-reported AI knowledge tend to perceive AI more favourably, a trend aligned with previous studies (Khare, 2023; Peng, 2020). These studies noted that risk perception tends to decrease as AI knowledge expands, a phenomenon termed 'risk blindness'. The same pattern was observed in this study: participants with higher levels of perceived AI knowledge exhibited more positive attitudes towards AI and used it more frequently.

These findings underline the importance of AI education and practical exposure for UX professionals. As the field of UX increasingly intersects with AI, it's crucial that we equip ourselves with the necessary knowledge and hands-on experience. At the same time, it’s worth ensuring safeguards are in place to tackle the ‘risk blindness’ that could arise as AI knowledge and confidence levels increase. It would be beneficial for UX professionals if managers fostered an environment that encourages metacognition, thereby helping them to better understand their own knowledge and its limitations. This could lead to more cautious and informed interactions with AI systems.

It’s worth noting that only perceived AI knowledge was measured. Previous research has shown that most non-experts hold relatively little knowledge about AI but tend to overestimate their understanding of the area.

While this study sheds light on some factors influencing attitudes towards AI among UX professionals, it's important to note that these are observational findings and should not be interpreted as causal relationships. Future research could explore these dynamics in more depth, perhaps focusing on how these relationships play out over time or in different contexts. In addition, variables such as AI knowledge relied on self-reported measures, which participants often overestimate, so future studies should include a more objective measure.

Conclusion

To conclude, the current findings indicate a cautiously optimistic approach towards AI integration in the workplace among UX professionals; most are willing to accept AI in their work as long as the appropriate regulations are in place and risks are mitigated. As AI becomes more prevalent, education and hands-on exposure to AI are becoming increasingly critical. There is a pressing need for mechanisms to prevent 'risk blindness' and promote informed interactions with AI systems. Organisations and managers should focus on reducing potential risks while enhancing the understanding and assimilation of new technologies, fostering a more accommodating and responsible environment for AI integration.

In practice, this means organisations should invest in reducing risk and in educating UX professionals on new technologies, both to address their concerns and to increase the acceptance of AI tools.
