Improving Data Quality in Online Studies: What's New?

Ensuring Data Integrity in the Age of AI

Originally published November 2021, updated January 2025

When conducting studies online, whether through surveys or unmoderated usability testing, we face a fundamental challenge: we cannot see our participants or control their environment. This limitation creates several concerns about data quality, and in the years since this article was first published in 2021, these challenges have evolved and expanded in unexpected ways (yes, it’s AI-related).

Understanding Core Data Quality Concerns

The most immediate challenge in online research comes from external factors affecting participant performance. In traditional lab-based studies, we can carefully control the environment, ensuring participants work in quiet spaces with minimal distractions and appropriate equipment. Back in my academic research days, we had noise-insulated labs and even used noise-cancelling headphones to ensure participants were not distracted while taking part in research. Online studies allow us to recruit from all over the world and conduct research faster but, unfortunately, strip away these controls. A participant might complete a crucial survey while watching television, use suboptimal hardware, or struggle with a slow internet connection. These variables can significantly impact task performance and data quality.

Verification presents another significant hurdle. Despite careful screening processes, confirming participants' identities in online studies remains challenging. Participants might misrepresent their age, gender, background, or other demographic information, especially when incentives are involved. This verification challenge becomes particularly acute in paid studies or when specific demographic requirements are crucial to the research outcomes.

Research has consistently shown that engagement levels in online studies tend to be lower than in controlled environments. Approximately 10-12% of participants can be classified as "careless responders" who don't pay sufficient attention to parts of online tasks (Meade & Craig, 2012). This phenomenon introduces noise into the data that can significantly impact research conclusions.

Participant honesty presents yet another dimension of concern. Previous research has identified two primary motivations for dishonesty in online studies: impression management and self-deceptive enhancement. Impression management reflects participants' conscious efforts to control how others perceive them, while self-deceptive enhancement represents an unconscious tendency to present oneself in an overly positive light. These tendencies can manifest in various ways, from using external aids when instructed not to (such as Googling answers) to providing dishonest responses in surveys.

Why Data Quality Matters

Research has shown that even a small number of careless responders can affect the results of a study. According to Huang et al. (2015, p.9) “the presence of 10% (and even 5%) … can cause spurious relationships among otherwise uncorrelated measures”. In UX research, this can lead the team to inaccurate conclusions about the users and their experience and can negatively affect design decisions.

Unfortunately, recognising responses of poor quality is not always straightforward. Even when participants respond to a survey randomly, they still produce some non-random patterns in their data, which means that detecting them requires a lot of effort and a combination of various methods (Curran, 2016).

Traditional Quality Control Methods

A number of techniques have been developed to allow us to check the quality of data obtained in online studies. Some of the most common ones are described below:

Consistency: Measures of internal reliability can indicate inconsistent responses from participants. This is usually assessed by calculating Cronbach’s alpha for a questionnaire. Removing participants with inconsistent answers can improve the internal reliability of a scale. Another way to measure consistency at an individual level is the even-odd consistency score; a separate score is calculated for the odd and even items of a scale for each participant and the relationship between them is examined (within-person correlation). A weak or non-significant correlation suggests poor consistency and could indicate data quality issues.
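To make the two consistency measures concrete, here is a minimal Python sketch. The function names and the data layout (one list of item scores per participant, and one list per subscale for the even-odd score) are assumptions made for illustration. The even-odd function returns the raw within-person correlation; published versions often apply a further correction, which is left out here.

```python
from statistics import mean, variance


def cronbach_alpha(responses):
    """Cronbach's alpha; `responses` holds one list of item scores per participant."""
    k = len(responses[0])  # number of items in the scale
    item_variances = [variance([row[i] for row in responses]) for i in range(k)]
    total_variance = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


def pearson(x, y):
    """Pearson correlation; returns None when either list has no variance."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return None
    return sxy / (sxx * syy) ** 0.5


def even_odd_consistency(scales):
    """Within-person correlation between odd-item and even-item half scores.

    `scales` holds ONE participant's answers, grouped as one list per subscale
    (each subscale needs at least two items).
    """
    odd_means = [mean(items[0::2]) for items in scales]   # items 1, 3, 5, ...
    even_means = [mean(items[1::2]) for items in scales]  # items 2, 4, 6, ...
    return pearson(odd_means, even_means)
```

A participant who gives the same answer to every item has no variance in their half scores, so the sketch returns None rather than a correlation. Such cases are better caught by long-string analysis.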

Speed of responses: This refers to the time it takes for an individual to respond to a number of items, and according to Curran (2016) it is the most widely used tool to eliminate poor quality data. This approach relies on calculating the average time participants spend on specific items (e.g., 2s per item) or on the full survey, and examining outliers (i.e. participants with unusually fast or slow responses).
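As a rough illustration, the speed check could look like the Python sketch below. The 2 seconds per item floor comes from the example above; the rule for slow responders (more than three times the median completion time) and all the names are assumptions chosen for the example, and the sketch uses the median rather than the average so that a few extreme times do not shift the reference point.

```python
from statistics import median


def flag_by_speed(durations, n_items, min_seconds_per_item=2.0, slow_factor=3.0):
    """Return {participant_id: "fast" | "slow"} for unusual completion times.

    `durations` maps participant id -> total seconds spent on the survey.
    Both thresholds are illustrative and should be justified per study.
    """
    typical = median(durations.values())
    flags = {}
    for pid, seconds in durations.items():
        if seconds / n_items < min_seconds_per_item:
            flags[pid] = "fast"   # less time per item than the chosen floor
        elif seconds > slow_factor * typical:
            flags[pid] = "slow"   # far slower than the typical participant
    return flags
```

Flagged participants are candidates for a closer look, not automatic exclusions: a slow completion may simply mean someone took a break.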

Accuracy: According to Moss (2019) “correct data are data that accurately measure a construct of interest”. This can be assessed at a group and at an individual level. At a group level, we examine whether data are related to similar constructs (convergent validity) and not related to dissimilar ones (discriminant validity). For example, learnability scores are expected to be positively related to usability scores. At the individual level, we assess whether people provide consistent responses to similar items. This is one of the reasons questionnaires often have similar questions worded in a different way (e.g., negatively-worded survey items). This allows us to investigate the accuracy of the data by looking at the difference between items measuring the same thing. The smaller the difference, the more accurate the data.
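The individual-level check can be sketched in Python as follows. The sketch assumes a 1 to 5 rating scale and pairs each item with a negatively-worded counterpart; the item names and the function name are made up for illustration.

```python
def pair_discrepancy(answers, pairs, scale_min=1, scale_max=5):
    """Mean absolute gap between each item and its reverse-scored counterpart.

    `answers` maps item id -> rating; `pairs` lists (item, negatively_worded_item).
    """
    gaps = []
    for item, negative_item in pairs:
        # Recode the negatively-worded item so both items point the same way.
        recoded = scale_min + scale_max - answers[negative_item]
        gaps.append(abs(answers[item] - recoded))
    return sum(gaps) / len(gaps)
```

A participant who strongly agrees with both "The app was easy to use" and "The app was hard to use" gets the maximum gap of 4 on that pair, while a consistent participant scores close to 0.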

Long-string analysis (response pattern indices): This assesses the number of identical answers participants give in sequence (e.g., choosing “I agree” for most statements). According to Schroeder et al. (2021) “…the assumption is that those individuals who are responding carelessly may do so by choosing the same response option to every question”. As a result, long-string analysis can help us remove “some of the worst of the worst responders” (Curran, 2015). You can find more information about how to conduct this analysis in Excel here. It is common to exclude participants who select the same answer consecutively for at least half the length of the total scale.
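For readers who prefer code to spreadsheets, a minimal Python sketch of long-string analysis is shown below. The function names are illustrative, and the default cut-off of half the scale length follows the rule of thumb mentioned above.

```python
from itertools import groupby


def longest_string(answers):
    """Length of the longest run of identical consecutive answers."""
    return max((len(list(run)) for _, run in groupby(answers)), default=0)


def is_long_string(answers, proportion=0.5):
    """True when the longest run covers at least `proportion` of the scale."""
    return len(answers) > 0 and longest_string(answers) >= proportion * len(answers)
```

For example, a participant answering 3, 3, 3, 3, 3, 2, 4, 1 on an eight-item scale has a longest run of five and would be flagged under the half-length rule.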

Look for outliers: Outliers are data points that differ significantly from other observations. Outliers can have multiple causes and careless responders are one of them (Curran, 2015). Some ways we can detect and deal with outliers are discussed in this article by Santoyo.
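One widely used rule of thumb, offered here only as an example and not necessarily the approach the linked article takes, treats values more than 1.5 interquartile ranges outside the middle half of the data as outliers. A minimal Python sketch, with an illustrative function name:

```python
from statistics import quantiles


def iqr_outliers(scores, k=1.5):
    """Return the positions of scores outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = quantiles(scores, n=4)  # quartiles of the scores
    spread = q3 - q1
    low, high = q1 - k * spread, q3 + k * spread
    return [i for i, score in enumerate(scores) if score < low or score > high]
```

Because outliers have multiple causes, a flagged score is a prompt to inspect that participant's other quality indicators rather than proof of careless responding.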

The AI Challenge: A New Frontier in Data Quality

The state of things in online research has shifted dramatically since 2021 with the widespread adoption of AI language models. Recent research by Zhang and colleagues (2023) revealed that approximately 34% of participants in online research platforms now use AI tools to assist in answering open-ended questions. This development introduces an entirely new dimension to data quality concerns.

AI-assisted responses present a unique challenge because they often appear authentic while lacking genuine personal insight. These responses tend to show greater homogeneity across participants, typically employing more neutral language and abstract concepts compared to the concrete, personal expressions characteristic of human responses. This homogenisation effect can mask important social variations that researchers aim to study, particularly in research about sensitive topics or group perceptions.

The impact of AI assistance shows clear demographic patterns, with higher usage among certain groups, including college-educated participants and those newer to research platforms. This creates potential sampling biases that researchers must consider in their analysis.

What Can We Do?

The evolution of data quality challenges requires a comprehensive approach that combines traditional methods with new strategies. To truly ensure data quality, researchers must implement preventive measures before data collection begins. These established methods, when combined thoughtfully, create a robust foundation for quality control.

Here are the key strategies researchers can employ:

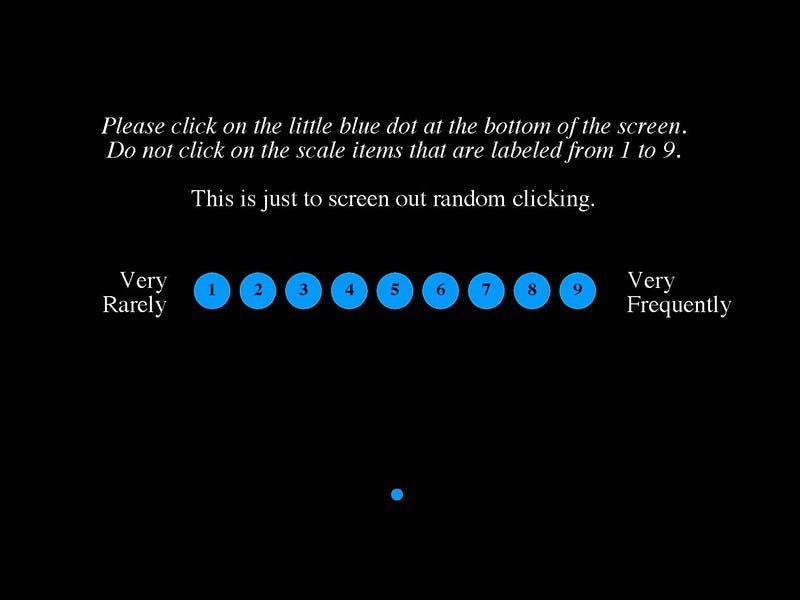

Attention Checks: These carefully designed items within questionnaires help researchers assess participant engagement throughout the study. As Curran (2015) explains, these checks allow researchers to make informed decisions about data quality based on specific responses. A common implementation involves direct instructions, such as "Please select Moderately Inaccurate for this item." Participants who follow these instructions demonstrate their attention to the task, while those who select other options may be flagging their responses as potentially problematic. Researchers have developed creative variations, including bogus items (such as "My main interests are coin collecting and interpretive dancing") and instructional manipulation checks that require participants to demonstrate their comprehension through specific actions.

An example of an instructional manipulation check (creator: Lambiam)

Bot Detection: As automated responses become increasingly sophisticated, bot detection has become crucial for maintaining data quality. Researchers can implement various technical solutions, such as honeypots or CAPTCHAs, to prevent automated programs from contaminating their data. These measures help ensure that responses come from real human participants rather than automated systems.
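A honeypot is typically a form field hidden from human participants, so any value in it suggests the form was filled in by a script. Checking for it after data collection can be as simple as the Python sketch below; the field name `website_url` and the function name are hypothetical.

```python
def filled_honeypot(submission, honeypot_field="website_url"):
    """True when the hidden honeypot field contains anything but whitespace.

    `submission` maps form field names to submitted values. The field name
    is an assumption; use whatever hidden field your survey actually defines.
    """
    value = submission.get(honeypot_field) or ""
    return bool(str(value).strip())
```

Submissions where the field is missing or empty pass the check, which is the expected outcome for human participants who never saw it.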

Participant Screening: Writing effective screening questions requires careful consideration and strategic thinking. The process involves more than simply filtering participants; it requires creating questions that accurately identify suitable participants while discouraging misrepresentation. The effectiveness of screening questions can significantly impact the overall quality of research data.

Seriousness Checks: This deceptively simple measure, introduced by Musch and Klauer (2002), directly asks participants to indicate the seriousness of their responses. While this approach might seem overly straightforward, research has demonstrated its effectiveness. Aust et al. (2013) found that participants who reported higher levels of seriousness provided more consistent and predictively valid responses across attitudinal and behavioural questions.

High-Quality Participant Pools: The source of participants plays a crucial role in data quality. Research platforms vary significantly in the quality of data they produce. Platforms like MTurk, CloudResearch, Prolific, and Qualtrics each have their strengths and weaknesses. One particularly interesting finding reveals that participants who use these platforms as their primary income source but spend minimal time on them tend to produce lower-quality data. This insight emphasises the importance of carefully selecting recruitment platforms based on research needs.

To address AI-specific challenges, researchers have begun implementing more sophisticated approaches that go beyond traditional quality control measures. One particularly promising strategy is the concept of "measured friction" - deliberately designed obstacles that make it more challenging to use AI tools without significantly impacting genuine human participants.

Consider a researcher studying customer experiences with a new product. Rather than simply asking "What was your experience with the product?", they might implement a staged response system where participants first describe their initial impression, then wait 30 seconds before a new text box appears asking about specific features they used, followed by another brief pause before questions about emotional response. This approach makes it more difficult to use AI to generate all responses at once while actually improving the quality of human responses by encouraging more thoughtful reflection.
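If the survey tool records when each stage was submitted, the pauses can also be checked afterwards. The Python sketch below assumes timestamps in seconds and a 30-second pause as in the example; the function name and data layout are illustrative.

```python
def stages_respect_pause(submitted_at, pause_seconds=30.0):
    """True when every stage was submitted at least `pause_seconds` after the previous one.

    `submitted_at` lists one timestamp (in seconds) per stage, in order.
    """
    return all(
        later - earlier >= pause_seconds
        for earlier, later in zip(submitted_at, submitted_at[1:])
    )
```

A response whose stages arrive closer together than the enforced pause suggests the delay was bypassed, which is worth a closer look.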

Multi-modal data collection has emerged as another powerful tool for ensuring response authenticity. This approach combines different types of data collection methods within the same study. For example, a UX researcher investigating mobile app usability might ask participants to:

Complete a written survey about their experience

Record a short voice memo describing their most frustrating moment

Upload a screenshot showing their favourite feature

Provide a brief video demonstration of how they complete a specific task

This combination of methods makes it significantly more challenging to use AI tools for response generation while also providing richer, more nuanced data. The varying formats help researchers triangulate findings and verify consistency across different modes of expression. For instance, if a participant's written responses about app navigation differ markedly from their recorded demonstration, this might flag potential quality issues.

Research platforms are beginning to integrate these approaches into their infrastructure. Some now offer built-in tools for collecting voice responses alongside traditional survey questions, or provide mechanisms for participants to easily upload short video clips. These technological advances make multi-modal data collection more feasible for researchers working with limited resources.

Clear communication about AI usage guidelines has also evolved beyond simple prohibitions. Forward-thinking researchers now develop nuanced policies that recognise the reality of AI tools while preserving research integrity. For instance, some studies now explicitly state that while using AI for language assistance (such as grammar checking or translation) is acceptable, using it to generate complete responses is not. These policies often include examples of acceptable and unacceptable AI use, making expectations clearer for participants.

However, it's crucial to understand that no single technique can ensure optimal data quality. Recent research has shown that relying too heavily on any one method, even a well-established one like attention checks, can potentially introduce bias into a study. For example, a participant might respond randomly to most items yet still pass certain quality checks, while another participant giving thoughtful responses might be flagged by some measures due to response patterns that appear suspicious out of context.

As P. G. Curran notes,

"The strongest use of these methods is to use them in concert; to balance the weaknesses of each technique and not simply eliminate individuals based on benchmarks that the researcher does not fully understand or cannot adequately defend."

This insight remains particularly relevant as we face new challenges in the age of AI-assisted responses.
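One way to put "in concert" into practice is to collect the flags from each method and review only participants flagged by several of them. The Python sketch below assumes each method has already produced a set of flagged participant ids; the method names, the function name, and the threshold of two methods are illustrative choices, not a recommended standard.

```python
def review_participants(flags_by_method, min_methods=2):
    """Return {participant_id: [methods]} for participants flagged by several methods.

    `flags_by_method` maps a method name to the ids that method flagged.
    """
    hits = {}
    for method, participant_ids in flags_by_method.items():
        for pid in participant_ids:
            hits.setdefault(pid, []).append(method)
    return {
        pid: sorted(methods)
        for pid, methods in hits.items()
        if len(methods) >= min_methods
    }
```

Keeping the list of methods alongside each participant makes any exclusion decision easier to explain and defend later.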

Looking Forward

As we move into 2025 and beyond, ensuring data quality in online studies will require increasingly sophisticated approaches. The future likely lies in developing more advanced AI detection tools, creating hybrid research methodologies, and implementing new verification technologies. However, the fundamental principle remains unchanged: no single method can ensure perfect data quality. Success requires a thoughtful combination of traditional quality control measures and new strategies, applied with an understanding of both human psychology and technological capabilities.

Note: This article has been updated from its original 2021 version to reflect new challenges and solutions in online research, particularly regarding AI-assisted responses.
