The Future of Design? Using AI to Enhance Human Cognition and Creativity

Promising new research combining biofeedback tools and AI to improve designers' metacognitive skills

As UX professionals, we often rely on metacognition - thinking about our own thought processes - to critically evaluate our designs, identify knowledge gaps, and adapt our creative approach. This article explores how monitoring our metacognitive activities, like assessing emotional responses, can help navigate design uncertainties and foster innovation.

We will focus on recent research introducing the "Multi-Self" tool, a novel development from Cornell University, which provides real-time biofeedback about designers' emotional states from neural data. By externalising this typically internal information, Multi-Self aims to stimulate valuable self-reflection and expand creative exploration. Even though this approach is still novel and experimental, this research highlights ways we can incorporate advancing technology such as AI and biometrics to enhance our skills and abilities in UX.

Metacognitive Monitoring in Design

Design is an iterative process that involves going back and forth between exploring different options (divergent thinking) and evaluating/synthesising those ideas (convergent thinking). During the exploration phase, designers can feel uncertain about whether their ideas will actually work or be successful (Cross, 2001).

It's important for designers to keep uncertainty at a productive level: too much uncertainty can lead to indecision and lack of progress, while too little can lead to only superficially exploring ideas rather than deeply questioning assumptions (Ball & Christensen, 2019).

One of the cognitive processes that plays a key role in this is metacognition. Metacognition refers to the process of "thinking about thinking" and regulating one's cognitive processes. Metacognitive monitoring specifically involves assessing one's own knowledge, thoughts, and progress on a task. For designers, metacognition helps regulate uncertainty and keep it in an optimal zone for creativity. It can help in evaluating different approaches, identifying knowledge gaps, and generating more creative solutions (Cross, 2004).

Several studies demonstrate strong links between metacognitive skills and positive design outcomes. For example, a recent study by Kavousi et al. (2020) found that prompting design students to engage in structured metacognitive activities improved their ability to evaluate concepts and make informed decisions. On the other hand, lapses in metacognitive monitoring have been linked to design fixation, where creators prematurely commit to a limited set of ideas (Crilly & Cardoso, 2017). This can negatively affect creativity.

An important part of the metacognitive monitoring process is monitoring your emotional reactions to design ideas and options. Emotions give us clues that can indicate levels of confidence, inspiration, and subjective uncertainty (Taura & Nagai, 2017). Monitoring emotions accurately during design, however, can be challenging.

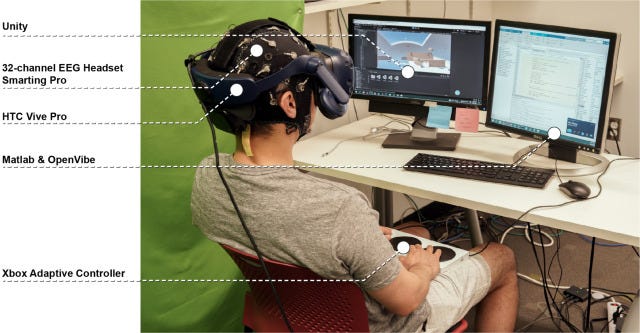

Recently, researchers from Cornell University developed a novel brain–computer interface (BCI)-VR tool called "Multi-Self" to address this. The tool aims to support metacognition by providing designers with real-time feedback about their emotions.

What is the Multi-Self?

The Multi-Self tool uses electroencephalography (EEG) sensors to detect brain activity associated with emotional responses. EEG involves placing electrodes on the scalp to measure electrical signals produced by brain cell firings. The tool applies machine learning to the raw EEG data to predict the user's levels of emotional valence (positive vs negative feelings) and arousal (excitement/engagement).

The goal of the Multi-Self tool is to help designers better perceive their emotions in the moment by using AI and biosensors. By applying machine learning to EEG data, it detects signals related to how positive and how excited the designer feels (emotional valence and arousal) and visualises them as real-time feedback during virtual design sessions.

More specifically, as the designer interacts with a VR environment to create interior designs for an architectural lobby space, they see a moving dot plotted on a 2D graph overlaid in their field of view. The dot's position indicates their predicted emotions, allowing them to track changes as they manipulate the virtual design.
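To make the moving-dot idea concrete, here is a minimal sketch of how predicted emotion levels could be turned into a position on a 2D graph. It is not the researchers' implementation: the names `LEVEL_TO_COORD`, `DotPosition`, `dot_position` and `smooth` are hypothetical, and the axis orientation (valence horizontal, arousal vertical) and the smoothing step are assumptions made for illustration.

```python
from dataclasses import dataclass

# Assumed mapping from a three-level prediction to a coordinate in [-1, 1].
LEVEL_TO_COORD = {"low": -1.0, "moderate": 0.0, "high": 1.0}


@dataclass
class DotPosition:
    x: float  # valence axis (assumed): negative on the left, positive on the right
    y: float  # arousal axis (assumed): calm at the bottom, excited at the top


def dot_position(valence_level: str, arousal_level: str) -> DotPosition:
    """Place the feedback dot from predicted valence and arousal levels."""
    return DotPosition(LEVEL_TO_COORD[valence_level], LEVEL_TO_COORD[arousal_level])


def smooth(previous: DotPosition, target: DotPosition, alpha: float = 0.5) -> DotPosition:
    """Move the dot part of the way towards the new prediction to avoid sudden jumps."""
    return DotPosition(
        previous.x + alpha * (target.x - previous.x),
        previous.y + alpha * (target.y - previous.y),
    )
```

Calling `dot_position("high", "low")` would place the dot at the far right and bottom of the graph, which under these assumptions means a positive but calm reaction.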

This is intended to help designers see their reactions more objectively from a "third person" point of view. By enhancing awareness of emotions, the tool aims to help designers find the optimal level of uncertainty to enable creative exploration rather than fixation or paralysis.

Testing the Concept

The researchers assessed Multi-Self's feasibility by testing it with 24 participants split between novice and expert designers - 10 experts with over 3 years of architectural design practice, and 14 novices with less experience. Their baseline emotional responses were first assessed by having them view panoramic 360-degree images of interior architectural spaces while EEG was recorded. They then self-reported their felt valence and arousal using visual scales.

This data was used to train personalised machine learning classification models to predict high, moderate, or low levels of valence and arousal from subsequent EEG signals for each participant. Participants then entered an immersive VR design environment where they could manipulate variables like wall shapes, furniture layouts, lighting fixtures and colour schemes to create custom lobby designs. While designing, the Multi-Self tool displayed its real-time predictions about the participant's emotional state of valence (positive to negative) and arousal (excited to calm).
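The idea of a personalised model, one classifier per participant trained on that person's own calibration data, can be sketched as follows. This is an illustration only: the article does not say which algorithm the researchers used, so a simple nearest-centroid classifier stands in for it here, and the participant IDs, feature values and function names are all made up.

```python
from collections import defaultdict
from math import dist


def train_centroids(features, labels):
    """Average the feature vectors of each label into one centroid per label."""
    grouped = defaultdict(list)
    for vector, label in zip(features, labels):
        grouped[label].append(vector)
    return {
        label: [sum(column) / len(vectors) for column in zip(*vectors)]
        for label, vectors in grouped.items()
    }


def predict(centroids, vector):
    """Return the label whose centroid is closest to the given feature vector."""
    return min(centroids, key=lambda label: dist(centroids[label], vector))


# Synthetic calibration data (hypothetical EEG features and self-reported levels).
calibration = {
    "P01": ([[0.1, 0.2], [0.2, 0.1], [0.5, 0.5], [0.9, 0.8]],
            ["low", "low", "moderate", "high"]),
    "P02": ([[0.9, 0.9], [0.5, 0.4], [0.1, 0.0]],
            ["low", "moderate", "high"]),
}

# One model per participant, trained only on that participant's data.
models = {pid: train_centroids(X, y) for pid, (X, y) in calibration.items()}
```

In this toy data the same feature vector, `[0.85, 0.9]`, is classified as "high" by P01's model and "low" by P02's, which is the reason for training a separate model for each person rather than one shared model.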

Two methods were used to test the tool's accuracy in assessing emotions. First, after each design change, participants were prompted by the system about whether its current prediction matched their own felt experience (e.g. "Do you agree the system thinks you feel excited about this design change?"). Second, at other points they were asked to self-report their valence and arousal levels, which could then be compared to the tool's unseen simultaneous predictions.

The EEG-based classifier achieved 49-52% accuracy in correctly predicting whether participants felt high, medium or low levels of emotional valence (positivity/negativity) and arousal (excitement/calmness) while engaged in the open-ended lobby design task. Though modest, this is above the roughly 33% that random guessing would achieve if the three levels were equally likely, and it suggests the potential to detect subtle affective shifts during typical design activities.
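To see what "better than chance" means for a three-level prediction, the comparison can be sketched like this. The labels below are invented for illustration and are not the study's data; the chance baseline assumes the three levels are equally likely, which may not hold in practice.

```python
def accuracy(predicted, reported):
    """Fraction of moments where the prediction equals the self-report."""
    if len(predicted) != len(reported) or not predicted:
        raise ValueError("need two non-empty sequences of equal length")
    matches = sum(p == r for p, r in zip(predicted, reported))
    return matches / len(predicted)


def chance_level(num_classes):
    """Expected accuracy of random guessing among equally likely classes."""
    return 1 / num_classes


# Made-up example: tool predictions vs. a participant's self-reports.
predicted = ["high", "low", "moderate", "high", "low", "moderate"]
reported = ["high", "moderate", "moderate", "low", "low", "high"]

observed = accuracy(predicted, reported)   # 3 matches out of 6
baseline = chance_level(3)                 # one in three
```

Here the predictions match the self-reports half of the time, compared with one time in three for random guessing, a gap similar in size to the one reported for Multi-Self.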

After completing the task, user attitudes and experiences were assessed through semi-structured interviews. Response themes highlighted several key individual differences in the way designers used the tool:

Some participants treated the AI feedback as an important decision-making guide, iterating designs to achieve more positive/calm predictions

Others used it selectively to gain confidence by confirming gut feelings about preferred designs

A few checked it only when indecisive between multiple options

Another difference was observed between novices and experts. In particular, novices relied on the tool more and many playfully explored to see how the AI assessments changed. On the other hand, most experts discounted or completely ignored the feedback, feeling they could self-assess accurately.

Many participants found that visualising unconscious emotional responses helped them pause and engage in self-reflection. Mismatches between their perceived emotions and the AI’s evaluation prompted participants to re-evaluate immediate preferences and discover unconventional ideas. However, in some cases, perceived inaccuracies led to scepticism.

Implications for Design

This study uncovers both the exciting potential and challenges of applying AI and physiological sensing to enhance metacognitive processes during creative work like design. Several implications arise for UX professionals and users of creativity support tools:

Providing real-time visual feedback about users' unconscious emotional states helped externalise internal reactions and stimulate self-reflection. For many, this visualisation prompted them to question gut instincts, adjust preferences, and explore a wider range of ideas. This shows how biometric data and AI can enable designers to more consciously track emotional responses over time and improve their work.

While some biofeedback mismatch introduced helpful uncertainty that expanded the solution space, too much perceived inaccuracy led to scepticism and disengagement. This is a limitation of the existing technology that could be addressed in future iterations.

Expert and novice designers exhibited very different attitudes towards the AI-based evaluations. More experienced creators tended to ignore or resist feedback due to confidence in their own judgments. In contrast, many novices found the external input helpful for guidance and validation. This highlights the need for adaptive or personalised metacognitive support tools to account for individual differences.

Human-AI co-design is slowly becoming a reality. Even though the existing tools are still experimental and under development, this study shows a glimpse of what future human-machine collaboration could look like and how it could be used to enhance creativity.

This research lays promising groundwork - but realising the full potential of AI-augmented metacognitive support in UX design remains an extensive challenge requiring interdisciplinary collaboration and further research. For example, this study only looked at short-term usage of the interface and a non-diverse sample. Keep an eye on this space, though, as the future of design is here!

I’d love to hear your thoughts on this! Would you be open to using tools like the Multi-Self to enhance your design process, problem solving, or creativity? What are your main concerns, if any?