Do Users Prefer Chatbots Over Humans? It Depends...

Context-Driven Chatbot Design for Enhanced User Experience

Customer service increasingly relies on chatbots. Many providers are using new AI advances to replace human staff with chatbots and reduce costs. But what is the impact of this on the user experience? And when, if ever, do users prefer interacting with a chatbot over a human?

While prior research has generally shown that people tend to respond negatively to chatbots compared to human service (Castelo et al., 2023; Luo et al., 2019), a new study by Jin, Walker & Reczek (2024) reveals that in contexts where consumers' self-presentation concerns are high, such as when shopping for embarrassing products, clearly identified chatbots can actually lead to more positive consumer responses than human agents. Let's take a closer look at what existing research tells us, the fascinating new findings from this study, and key takeaways for UX and CX professionals.

A Quick Look at the Literature

Much of the existing research on consumer reactions to AI service agents has focused on the concept of "algorithm aversion," which suggests that people often prefer human judgment over algorithms, even when algorithms outperform humans (Dietvorst et al., 2015). In the context of consumer behaviour, this has been extended to suggest that consumers may prefer interacting with human service providers over automated agents like chatbots (Burton et al., 2020).

However, a closer look at the literature reveals mixed findings on user preferences for human versus bot agents. While some studies do find users reacting more positively to human service (Luo et al., 2019), others show the opposite pattern, with consumers preferring bots in certain contexts (Hoorn and Winter, 2018; Yueh et al., 2020). Still other research observes ambivalent reactions, with consumers expressing both positive and negative sentiments toward bots (Desideri et al., 2019).

These mixed findings suggest that user preferences for humans versus bots are highly context-dependent. Factors such as the type of task being automated appear to play a key role in shaping these preferences (Castelo et al., 2019). For tasks seen as more subjective or requiring empathy, consumers may favour human providers, while for tasks perceived as more objective or analytical, bots may be preferred.

Research has shown that robots are preferred when discussing negative experiences (Uchida et al., 2017) or embarrassing medical symptoms (Pitardi et al., 2021). Another relevant study is a field experiment by Luo et al. (2019) that tested chatbot effectiveness in sales. They found that chatbots could close sales as effectively as human agents, but only when consumers were unaware that the bot was not human. When bot identity was disclosed, sales performance declined.

However, the approach of concealing bot identity proposed by Luo et al. violates emerging regulations aimed at increasing transparency around bot interactions, so it is not an option for most companies employing chatbots. Companies must disclose bot identity and let users opt out of such interactions, or they risk legal consequences.

New Insights Into Chatbot Interaction

Jin et al. (2024) conducted five studies examining how self-presentation concerns affect interactions with chatbots when the bot's identity is either undisclosed and ambiguous, or clearly revealed to the consumer upfront.

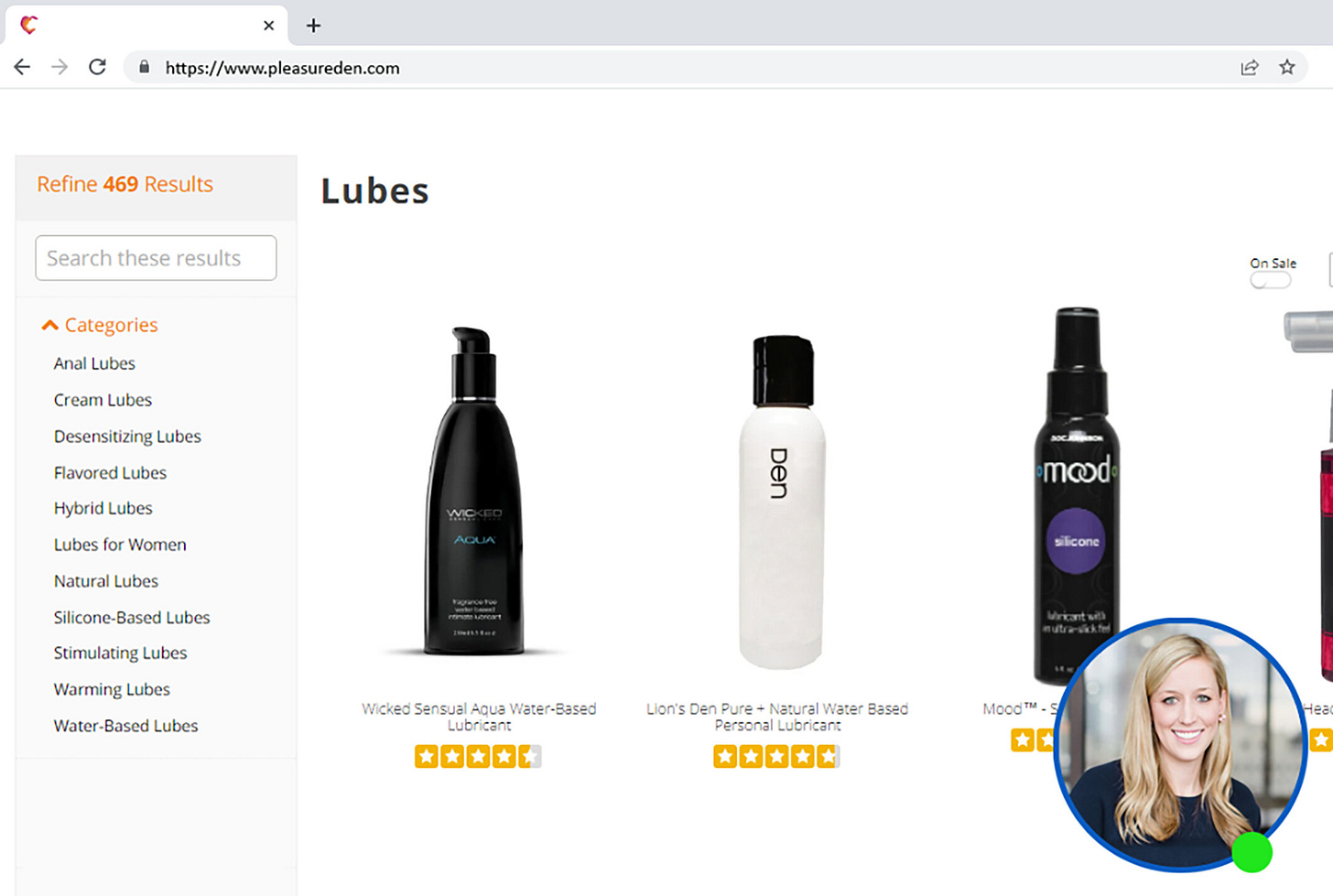

In the first two studies, when a chatbot's identity was ambiguous, participants shopping for embarrassing products (e.g. lube, anti-diarrhoea medicine) were more likely to infer that the agent was human. The researchers explain that wrongly assuming the agent is human when it is actually a bot lets the consumer mentally prepare for potential embarrassment, which makes it a less costly error than assuming it is a bot and then discovering it is human.

However, follow-up studies found that when chatbot identity was clearly disclosed, consumers responded more positively (e.g. greater willingness to interact) to chatbots than to human agents for embarrassing products. This preference for bots was driven by consumers perceiving chatbots as less able to feel emotions and consciously judge them, which reduced embarrassment.

Interestingly, anthropomorphising chatbots by giving them humanlike features attenuated this effect, as it made even disclosed bots seem more able to feel and judge. Having higher individual levels of self-presentation concern also led consumers to ascribe more sentience to anthropomorphic chatbots.

Implications for UX

These findings have important implications for the design of chatbot interactions, especially in contexts that may trigger self-consciousness or embarrassment for consumers:

Clearly identify chatbots in sensitive contexts: While ambiguity about bot identity may be fine for neutral interactions, when embarrassment is a risk, knowing it's a bot can help consumers open up.

Avoid over-anthropomorphising chatbots in sensitive contexts: Making disclosed chatbots seem too human can undermine their advantage in reducing embarrassment. Minimal humanisation is likely optimal in such cases.

Contextual awareness: While it's clear that medical issues or sexual products may trigger self-consciousness, UX professionals should look for embarrassment potential in a wide range of domains. Lacking knowledge on a stereotypically gendered topic (like women and car repair), for instance, could be embarrassing enough to impact agent preferences.

Conduct thorough user research: Engage in comprehensive user research to better understand potential users and the context of their interactions with AI.

Customise interactions based on context: Always consider the context of user interactions when deciding whether to employ a chatbot or provide the option of a human advisor. There is no one-size-fits-all solution, so let user research guide the choice.
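For teams that configure their chat widget in code, the guidelines above could be captured in a small decision rule. The Python sketch below is only an illustration under stated assumptions: the names `AgentPlan`, `Humanisation`, `plan_agent` and the example topic list are hypothetical and do not come from Jin et al. (2024) or from any chatbot platform. It always discloses the bot and offers a human option, and it dials humanisation down when the topic is likely to be embarrassing.

```python
from dataclasses import dataclass
from enum import Enum


class Humanisation(Enum):
    """How humanlike the disclosed chatbot is made to appear (hypothetical scale)."""
    MINIMAL = "minimal"
    MODERATE = "moderate"


@dataclass(frozen=True)
class AgentPlan:
    disclose_bot: bool          # tell the user upfront that the agent is a bot
    humanisation: Humanisation  # degree of humanlike styling (name, avatar, tone)
    offer_human: bool           # let the user opt for a human advisor instead


# Assumption: a placeholder list. In practice it would come from user research,
# since embarrassment potential varies widely across domains and audiences.
EXAMPLE_SENSITIVE_TOPICS = frozenset({"sexual health", "digestive health"})


def plan_agent(
    topic: str,
    user_flagged_sensitive: bool = False,
    sensitive_topics: frozenset = EXAMPLE_SENSITIVE_TOPICS,
) -> AgentPlan:
    """Pick a chatbot presentation for a topic, following the guidelines above."""
    sensitive = user_flagged_sensitive or topic.strip().lower() in sensitive_topics
    return AgentPlan(
        disclose_bot=True,  # disclosure is treated as non-negotiable
        humanisation=Humanisation.MINIMAL if sensitive else Humanisation.MODERATE,
        offer_human=True,   # keep a human option available in every context
    )


if __name__ == "__main__":
    print(plan_agent("Sexual health"))
    print(plan_agent("phone plans"))
```

The `user_flagged_sensitive` flag is there because a topic list alone will miss cases that only user research uncovers, such as a customer who feels self-conscious about a stereotypically gendered topic.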

In conclusion, the integration of chatbots in customer service offers both challenges and opportunities for enhancing user experience. Understanding the nuanced preferences of users, especially in contexts where self-presentation concerns are heightened, is crucial. We must leverage these insights to design chatbot interactions that are transparent and contextually aware.
